A Balanced Introduction to Computer Science and Programming

David Reed

Creighton University

Copyright © 2004 by Prentice Hall

Chapter 6: The History of Computers

Where a calculator on the ENIAC is equipped with 18,000 vacuum tubes and

weighs 30 tons, computers in the future may have only 1,000 vacuum tubes and weigh

only 1 1/2 tons.

Popular Mechanics, 1949

Never trust a computer you can't lift.

Stan Mazor, 1970

If the automobile had followed the same development cycle as the computer,

a Rolls-Royce would today cost $100, get one million miles to the gallon,

and explode once a year, killing everyone inside.

Robert X. Cringely

Computers are such an integral part of our society that it is sometimes

difficult to imagine life without them. However, computers as we know them

are relatively new devices, with the first electronic computers dating back

to the 1940's. Since that time, technology has advanced at an astounding rate,

with the capacity and speed of computers approximately doubling every two years.

Today, pocket calculators have many times the memory capacity and processing

power of the mammoth computers of the 1950's and 1960's.

This chapter presents an overview of the history of computers and computer

technology. The history of computers can be divided into generations, roughly

defined by technological advances that led to improvements in design,

efficiency, and ease of use.

Generation 0: Mechanical Computers (1642-1945)

Pascal's calculator

The first working calculating machine was invented in 1623 by German inventor

Wilhelm Schickard (1592-1635), although details of its design were lost in a

fire soon after its construction. In 1642, the French scientist Blaise Pascal

(1623-1662) built a mechanical calculator when he was only 19. His machine

used mechanical gears and was powered by hand. A person could enter numbers

up to eight digits long using dials and then turn a crank to either add or

subtract. Thirty years later, the German mathematician Gottfried Wilhelm von

Leibniz (1646-1716) generalized the work of Pascal to build a mechanical

calculator that could also multiply and divide. A variation of Leibniz's

calculator, built by Thomas de Colmar (1785-1870) in 1820, was widely used

throughout the 19th century.

Jacquard's loom

While mechanical calculators grew in popularity in the early 1800's, the first

programmable machine built was not a calculator at all, but a loom. Around 1801,

Frenchman Joseph-Marie Jacquard (1752-1834) invented a programmable loom that

used removable punched cards to represent patterns. Before Jacquard's loom,

producing tapestries was complex and tedious work. In order to produce a

pattern, different colored threads (called wefts) had to be woven over and

under the cross-threads (called warps) to produce the desired effect. Jacquard

devised a way of encoding the patterns of the threads using metal cards with

holes punched in them. When a card was fed through the machine, hooks passed

through the holes to selectively raise warp threads, and so produce the

desired over-and-under pattern. The result was that complex brocades could

be encoded using the cards, and then reproduced exactly. Simply by changing

the cards, the same loom could be used to automatically weave different patterns.
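The idea of a card as a stored pattern can be sketched in a few lines of Python. This is only an illustration: the function name `weave` and the string encoding of a card ('1' for a hole, '0' for no hole) are assumptions made here, not a description of Jacquard's actual card format.

```python
def weave(cards):
    """Render one woven row per card: a hole ('1') raises the warp thread."""
    rows = []
    for card in cards:
        # A raised warp thread shows on top ('|'); otherwise the weft covers it ('-').
        rows.append("".join("|" if hole == "1" else "-" for hole in card))
    return rows


# A hypothetical four-card "program" that produces a diagonal pattern.
diagonal = ["1000", "0100", "0010", "0001"]
for row in weave(diagonal):
    print(row)
```

Passing a different list of cards to the same function yields a different pattern, which mirrors how one loom could weave many designs.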

Babbage's Difference Engine

The idea of using punched cards for storing data was adopted in the mid 1800's

by the English mathematician Charles Babbage (1791-1871). In 1821, he proposed

the design of his Difference Engine, a steam-powered mechanical calculator for

tabulating the values of polynomial functions. Although a fully functional

model was never completed due to limitations in 19th-century manufacturing

technology, a prototype that punched output onto copper plates was built and

used in computing data for naval navigation. Babbage's work with the Difference

Engine led to his design in 1833 of a more powerful machine that included many

of the features of modern computers. His Analytical Engine was to be a

general-purpose, programmable computer that accepted input via punched cards

and printed its output on paper. Similar to the design of modern computers,

the Analytical Engine was to be made of integrated components, including a

readable/writeable memory for storing data and programs (which Babbage called

the store), and a control unit for fetching and executing instructions (which

he called the mill). Although a working model of the Analytical Engine was

never completed, its innovative and visionary design was popularized by the

writings and patronage of Augusta Ada Byron, Countess of Lovelace (1815-1852).
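The Difference Engine takes its name from the method of finite differences, which tabulates a polynomial using repeated addition alone. The sketch below illustrates that method in Python; it is a modern illustration rather than a model of Babbage's mechanism, and the function name `tabulate_by_differences` is hypothetical.

```python
def tabulate_by_differences(initial_values, count):
    """Tabulate a polynomial of degree d from its first d + 1 values.

    initial_values: p(0), p(1), ..., p(d) for a polynomial of degree d.
    count: how many table entries to produce in total.
    """
    # Collect the leading entry of each difference column:
    # [p(0), first difference, second difference, ...].
    column = list(initial_values)
    registers = []
    while column:
        registers.append(column[0])
        column = [b - a for a, b in zip(column, column[1:])]

    table = []
    for _ in range(count):
        table.append(registers[0])
        # Each register absorbs the one to its right: additions only.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# p(x) = x^2 + x + 41, seeded with p(0), p(1), p(2).
print(tabulate_by_differences([41, 43, 47], 6))  # [41, 43, 47, 53, 61, 71]
```

Once the starting differences are set, every further table entry costs only a handful of additions, which is the kind of work gears and wheels can do.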

Hollerith's machine

Punch cards resurfaced in the late 1800's in the form of Herman Hollerith's

tabulating machine. Hollerith invented a machine for sorting and tabulating

data for the 1890 U.S. Census. His machine utilized punch cards to represent

census data, with specific holes on the cards representing specific information

(such as male/female, age, home state, etc.). Recall that in Jacquard's loom,

the holes in punch cards allowed hooks to selectively pass through and raise

warp threads. In the case of Hollerith's tabulating machine, metal pegs

passed through holes in the cards, making an electrical connection with a

plate below that could be sensed by the machine. By specifying the desired

pattern of holes, the machine could sort or count all of the cards

corresponding to people with given characteristics (such as all men, aged

30-40, from Maryland). Using Hollerith's tabulating machine, the 1890 census

was completed in six weeks (compared to the 7 years required for the 1880

census). Hollerith founded the Tabulating Machine Company in 1896 to market

his machine. Eventually, under the leadership of Thomas J. Watson, Sr.,

Hollerith's company would become known as International Business Machines (IBM).
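The counting step described above can be sketched in Python by treating each card as a set of punched hole positions. The hole layout in `HOLES` and the function names `punch` and `count_matching` are hypothetical, chosen only for illustration; the real 1890 card layout was different.

```python
# Hypothetical hole positions; the real 1890 card layout was different.
HOLES = {"male": 0, "female": 1, "age_30_40": 2, "maryland": 3, "ohio": 4}


def punch(*traits):
    """Return a card as the set of hole positions punched for the given traits."""
    return frozenset(HOLES[t] for t in traits)


def count_matching(cards, *traits):
    """Count the cards in which every hole required by the pattern is punched."""
    required = punch(*traits)
    # A peg makes contact only where a hole exists, so a card matches
    # when the required holes are a subset of its punched holes.
    return sum(1 for card in cards if required <= card)


cards = [
    punch("male", "age_30_40", "maryland"),
    punch("female", "age_30_40", "maryland"),
    punch("male", "maryland"),
    punch("male", "age_30_40", "ohio"),
]
print(count_matching(cards, "male", "age_30_40", "maryland"))  # prints 1
print(count_matching(cards, "male"))  # prints 3
```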

electromagnetic relay

It wasn't until the 1930's and the advent of electromagnetic relays that

computer technology really started to develop. An electromagnetic relay is

a mechanical switch that can be opened and closed by an electrical current

that magnetizes it. The German engineer Konrad Zuse (1910-1995) is credited

with building the first computer using relays in the late 1930's. However,

his work was classified by the German government and eventually destroyed

during World War II, and so did not influence other researchers. In the late

1930's, John Atanasoff (1903-1995) at Iowa State and George Stibitz (1904-1995)

at Bell Labs independently designed and built automatic calculators using

electromagnetic relays. In the early 1940's, Howard Aiken (1900-1973) at

Harvard rediscovered the work of Babbage and began applying some of Babbage's

ideas to modern technology. Aiken's Mark I, built in 1944, may be seen as an

implementation of Babbage's Analytical Engine using relays.

In comparison with modern computers, the speed and computational power of

these early computers may seem primitive. For example, the Mark I computer

could store only 72 numbers in memory, although it could store an additional

60 constants through manual switches. The machine could perform 10 additions

per second, but required up to 6 seconds to perform a multiplication and 12

seconds to perform a division. Still, it was estimated that complex calculations

could be completed 100 times faster using the Mark I as opposed to existing

technology at the time.

Generation 1: Vacuum Tubes (1945-1954)

vacuum tubes

While electromagnetic relays were certainly much faster than wheels and gears,

they still required the opening and closing of mechanical switches. Thus,

computing speed was limited by the inertia of moving parts. Relays also tended

to be cumbersome and had a tendency to jam. A classic example of this is an

incident involving the Harvard Mark II (1947), in which a computer failure was

eventually traced to a moth that had become wedged between relay contacts.

Grace Murray-Hopper (1906-1992), who was on Aiken's staff at the time, taped

the moth into the computer logbook and facetiously noted the "First actual

case of bug being found."

In the mid 1940's, electromagnetic relays began to be replaced by vacuum

tubes, resulting in machines whose only moving parts were electrons. Invented

by Lee de Forest (1873-1961) in 1906, a vacuum tube is a small glass tube

from which all or most of the gas has been removed, permitting electrons to

move with minimal interference from gas molecules. Since vacuum tubes have no

moving parts (only the electrons move), they permitted the switching of

electrical signals at speeds far exceeding those of any mechanical device.

In fact, vacuum tubes were up to 1,000 times faster than electromagnetic

relays. They could also be used to construct fast storage devices.

COLOSSUS

The stimulus for the electronic (vacuum tube) computer was World War II. The

first electronic computer was COLOSSUS, built by the British government to decode

encrypted Nazi communications. COLOSSUS was designed in part by the British

mathematician Alan Turing (1912-1954), who is considered one of the founding

fathers of computer science due to his seminal work in the theory of

computability and artificial intelligence. While operational in 1943,

COLOSSUS's influence over other researchers at the time was limited as its

existence remained classified for over 30 years. It contained more than 2,300

vacuum tubes, and had a unique design due to its intended purpose as a

code-breaker. It contained 5 different processing units, each of which could

read in and process 5,000 characters of code per second. Using COLOSSUS,

British Intelligence was able to decode Nazi military communications for

extended periods, providing invaluable support to Allied operations during the war.

ENIAC

At roughly the same time in the United States, physics professor John

Mauchly (1907-1980) and his graduate student J. Presper Eckert (1919-1995)

designed ENIAC, which was an acronym for Electronic Numerical Integrator And

Computer. The ENIAC was designed to compute ballistics tables for the U.S. Army,

but it was not completed until 1946. It consisted of 18,000 vacuum tubes and

1,500 relays, weighed 30 tons, and consumed 140 kilowatts of power. In contrast

to the Mark I, the ENIAC could only store 20 numbers in memory, although more

than 100 constants could be entered using switches. Still, the ENIAC could

perform more complex calculations than the Mark I and operated up to 500 times

faster (5,000 additions per second). The ENIAC was programmable in that it

could be reconfigured to perform different computations. However, reprogramming

the machine required manually setting up to 6,000 multiposition switches and

reconnecting cables. In a sense, it was not so much reprogrammed as it was

rewired each time the application changed.

John von Neumann

One of the scientists involved in the ENIAC project was John von Neumann

(1903-1957), who along with Turing is considered a founding father of the

field of computer science. Von Neumann recognized that programming via switches

and cables was tedious and error prone. As an alternative, he designed a

computer architecture in which the program could be stored in memory along

with the data. Although this idea was initially proposed by Babbage with his

Analytical Engine, von Neumann is credited with its formalization using modern

designs. Von Neumann also recognized the advantages of using binary (base 2)

representation in memory as opposed to decimal (base 10), which had been used

previously. His basic design was used in EDVAC (Eckert and Mauchly at UPenn,

1952), IAS (von Neumann at Princeton, 1952), and subsequent machines. The von

Neumann architecture is still the basis for nearly all modern computers.

As a result of von Neumann's "stored program" architecture, the process of

programming a computer became equally if not more important than designing a

computer. Before von Neumann, computers were not so much programmed as they

were hard-wired to a particular task. With the von Neumann architecture, a

program could be read in (via cards or tapes) and stored in the memory of the

computer. Reprogramming the computer did not require any reconfiguring; it

simply involved reading in and storing a new program for execution. At first,

programs were written in machine language, sequences of 0's and 1's that

corresponded to instructions directly executable by the hardware. While this

was an improvement over rewiring, it still required the programmer to write

and manipulate pages of binary numbers -- a formidable and error-prone task.

In the early 1950's, assembly languages were invented, which simplified the

programmer's task somewhat by substituting simple mnemonic names for binary numbers.
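To make the stored-program idea concrete, here is a minimal sketch of a toy machine in Python. Everything about it is an assumption made for illustration: the four-instruction set, the encoding of each instruction as opcode times 100 plus an address, and the names `assemble` and `run`. Real machine language was binary; decimal words are used here only to keep the listing readable.

```python
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "STORE": 3}


def assemble(lines):
    """Translate mnemonic lines such as 'ADD 11' into numeric machine words."""
    words = []
    for line in lines:
        parts = line.split()
        address = int(parts[1]) if len(parts) > 1 else 0
        words.append(OPCODES[parts[0]] * 100 + address)
    return words


def run(memory):
    """Fetch and execute instructions from memory until HALT; return memory."""
    accumulator = 0
    program_counter = 0
    while True:
        word = memory[program_counter]       # fetch
        opcode, address = divmod(word, 100)  # decode
        program_counter += 1
        if opcode == 0:                      # HALT
            return memory
        elif opcode == 1:                    # LOAD
            accumulator = memory[address]
        elif opcode == 2:                    # ADD
            accumulator += memory[address]
        elif opcode == 3:                    # STORE
            memory[address] = accumulator
        else:
            raise ValueError(f"unknown opcode {opcode}")


program = assemble(["LOAD 10", "ADD 11", "STORE 12", "HALT"])
# The program occupies cells 0-3; cells 10-12 hold data in the same memory.
memory = program + [0] * 6 + [7, 35, 0]
print(program)          # [110, 211, 312, 0]
print(run(memory)[12])  # 42
```

Loading a different list of words into `memory` changes what the machine computes, with no change to `run` itself. The `assemble` function plays the role of an assembly language: the programmer writes `ADD 11` and the tool produces the number `211`.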

The early 1950's also marked the birth of the commercial computer industry.

Eckert and Mauchly left the University of Pennsylvania to form their own company.

In 1951, the Eckert-Mauchly Computer Corporation (later a part of Remington-Rand,

then Sperry-Rand) began selling the UNIVAC I computer. The first UNIVAC I was

purchased by the U.S. Census Bureau, and a subsequent UNIVAC I captured the

public imagination when it was used by CBS to predict the 1952 presidential

election. Several other companies would soon follow Eckert-Mauchly with

commercial computers. It is interesting to note that at this time,

International Business Machines (IBM) was a small company producing card punches

and mechanical card sorting machines. It entered the computer industry late in

1953, and did not really begin its rise to prominence until the early 1960's.

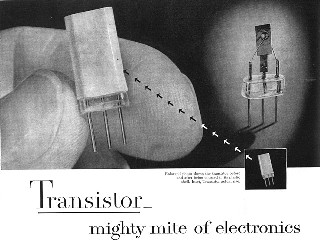

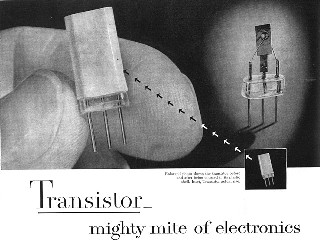

Generation 2: Transistors (1954-1963)

RCA transistor ad from Fortune, 1953/3

Vacuum tubes, in addition to being relatively large (several inches long),

dissipated an enormous amount of heat. Thus, they required lots of space for

cooling and tended to burn out frequently. The next improvement in computer

technology was the replacement of vacuum tubes with transistors. Invented by

John Bardeen, Walter Brattain, and William Shockley in 1947, a transistor is a

piece of semiconductor material (such as silicon) whose conductivity can be turned on and off using an electric

current. Transistors were much smaller, cheaper, more reliable, and more

energy-efficient than vacuum tubes. As such, they allowed for the design of

smaller and faster machines at drastically lower cost.

Transistors may be the most important technological development of the 20th

century. They allowed for the development of small and affordable electronic

devices: radios, televisions, phones, computers, etc., as well as the

information-based economy that developed along with them. The potential impact

of transistors was recognized almost immediately, earning Bardeen, Brattain,

and Shockley the 1956 Nobel Prize in physics. The first transistorized computers

were supercomputers commissioned by the Atomic Energy Commission for nuclear

research in 1956: Sperry-Rand's LARC and IBM's STRETCH. Companies such as IBM,

Sperry-Rand, and Digital Equipment Corporation (DEC) began marketing

transistor-based computers in the early 1960's, with the DEC PDP-1 and IBM

1400 series being especially popular.

Rear Admiral Grace Murray-Hopper

As transistors drastically reduced the cost of computers, even more attention

was placed on programming. If computers were to be used by more than just the

engineering experts, programming would have to become simpler. In 1957, John

Backus (1924-) and his group at IBM introduced the first high-level programming

language, FORTRAN (FORmula TRANslator). This language allowed the programmer to

work at a higher level of abstraction, programming via mathematical formulas as

opposed to assembly level instructions. Programming in a high-level language

like FORTRAN greatly simplified the task of programming, although IBM's original

claims that FORTRAN would "eliminate coding errors and the debugging process"

were a bit optimistic. Other high-level languages were developed in this

period, including LISP (John McCarthy at MIT, 1959), BASIC (John Kemeny at

Dartmouth, 1964), and COBOL (Grace Murray-Hopper at the Department of

Defense, 1960).

The 1960's saw the rise of the computer industry, with IBM becoming a major

force. The success of IBM is attributed less to superior technology than

to its shrewd business sense and effective long-range planning. While

smaller companies like DEC, CDC, and Burroughs may have had more advanced

machines, IBM won market share through aggressive marketing, reliable customer

support, and a strategy of designing an entire family of compatible,

interchangeable machines.

Generation 3: Integrated Circuits (1963-1973)

integrated circuits

Transistors were far superior to the vacuum tubes they replaced in many ways:

they were smaller, cheaper to mass produce, and more energy-efficient. However,

early transistors still generated a great deal of heat, which could damage

other components inside the computer. In 1958, Jack Kilby (1923-) at Texas

Instruments developed a technique for manufacturing transistors as layers of

conductive material on a silicon disc. This new form of transistor could be

made much smaller than existing, free-standing transistors, and also generated

less heat. Thus, tens or even hundreds of transistors could be layered onto the

same disc and connected with conductive layers to form simple circuits. Such a

disc, packaged in metal or plastic with external pins for connecting with other

components, is known as an Integrated Circuit (IC) or IC chip. Packaging

transistors and related circuitry on an IC that could be mass-produced made

it possible to build computers that were smaller, faster, and cheaper. A

complete computer system could be constructed by mounting a set of IC chips

(and other support devices) on circuit boards and soldering them together.

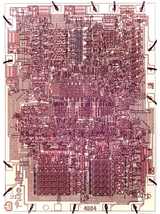

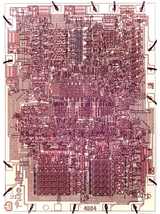

Intel 4004

As manufacturing technology improved, the number of transistors that could be

fit on a single chip increased. In 1965, Gordon Moore (1929-) noticed that the

number of transistors that could fit on a chip roughly doubled every 12 to 18

months. This trend became known as Moore's Law, and has continued to be an

accurate predictor of technological advances. By the 1970's, the Large Scale

Integration (LSI) of thousands of transistors on a single IC chip became

possible. In 1971, the Intel Corporation made the logical step of combining

all of the control circuitry for a calculator into a single chip called a

microprocessor. The Intel 4004 microprocessor contained more than 2,300

transistors. Its descendant, the 8080, which was released in 1974, contained

6,000 transistors and was the first general-purpose microprocessor, meaning

that it could be programmed to serve various functions. The Intel 8080 and

its successors, the 8086 and 8088 chips, served as central processing units

for numerous personal computers in the 1970's and early 1980's, including the IBM PC. Other

semiconductor vendors such as Texas Instruments, National Semiconductor,

Fairchild Semiconductor, and Motorola also produced microprocessors in the 1970's.

As was the case with previous technological advances, the development of

integrated circuits led to faster and cheaper computers. Whereas computers in

the early 1960's were affordable only for large corporations, IC technology

rapidly brought down costs to the level where small businesses could afford

them. As such, more and more people needed to be able to interact with computers.

A key to making computers easier for non-technical users was the development of

operating systems, master control programs that oversee the operation of the

computer and manage peripheral devices. An operating system acts as an interface

between the user and the physical components of the computer, and through a

technique known as time-sharing, can even allow multiple users to share the same

computer simultaneously. In addition to operating systems, specialized

programming languages were developed to fill the needs of the broader base

of users. In 1971, Niklaus Wirth (1934-) developed Pascal, a simple language

designed primarily for teaching but which has dedicated users to this day.

In 1972, Dennis Ritchie (1941-) developed C, a systems language used in the

development of the UNIX operating system. Partly because of the success of UNIX,

C and its descendant C++ have become the industry standards for systems

programming and software engineering.

Generation 4: VLSI (1973-1985)

| Year | Processor | # Transistors |
|------|-------------|---------------|
| 2000 | Pentium 4 | 42,000,000 |
| 1999 | Pentium III | 9,500,000 |
| 1997 | Pentium II | 7,500,000 |
| 1993 | Pentium | 3,100,000 |
| 1989 | 80486 | 1,200,000 |
| 1985 | 80386 | 275,000 |
| 1982 | 80286 | 134,000 |
| 1978 | 8088 | 29,000 |
| 1974 | 8080 | 6,000 |
| 1972 | 8008 | 3,500 |
| 1971 | 4004 | 2,300 |

Intel Family of IC Chips

While the previous generations of computers were defined by new technologies,

the jump from generation 3 to generation 4 is largely based upon scale. By the

mid 1970's, advances in manufacturing technology led to the Very Large Scale

Integration (VLSI) of hundreds of thousands and eventually millions of

transistors on an IC chip. As an example of this, consider the table

above, which shows the number of transistors on chips in the Intel family. It is

interesting to note that the first microprocessor, the 4004, had approximately

the same number of switching devices (transistors) as the COLOSSUS, which

utilized vacuum tubes in 1943. Throughout the 1970's and 1980's, successive

generations of IC chips added more and more transistors, providing more

complex functionality in the same amount of space. It is astounding to note

that the Pentium 4, which was released in 2000, has more than 42 million

transistors, with individual transistors as small as 0.18 microns

(0.00000018 meters).
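The growth shown in the Intel table can be turned into an average doubling time with a short calculation. The Python sketch below uses only the first and last rows of the table (the 4004 and the Pentium 4); the function name `doubling_time_years` is hypothetical, and using two endpoints gives only a rough average, not a fit to every row.

```python
import math


def doubling_time_years(year_a, count_a, year_b, count_b):
    """Average years per doubling between two (year, transistor count) points."""
    doublings = math.log2(count_b / count_a)
    return (year_b - year_a) / doublings


# Endpoints taken from the Intel table: 4004 (1971) and Pentium 4 (2000).
years = doubling_time_years(1971, 2_300, 2000, 42_000_000)
print(round(years, 1))  # 2.0
```

For these two data points the count doubled about 14 times in 29 years, or roughly once every two years. That is somewhat slower than the 12 to 18 months quoted for Moore's Law, though the exact figure depends on which rows are compared.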

As the mass production of microprocessor chips became possible in the 1970's

with VLSI, the cost of computers dropped to the point where a single individual

could afford one. The first personal computer (PC), the MITS Altair 8800, was

marketed in 1975 for under $500. In reality, the Altair was a computer kit that

included all of the necessary electronic components, including the 8080

microprocessor that served as the central processing unit for the machine.

The customer was responsible for wiring and soldering all of these components

together to assemble the computer. Once assembled, the Altair had no keyboard,

no monitor, and no permanent storage. The user entered instructions directly by

flipping switches on the console, and viewed output as blinking lights. Despite

these limitations, demand for the Altair was overwhelming.

Jobs & Wozniak

While the company that sold the Altair, MITS, would fold within a few years,

other small computer companies successfully charted the PC market in the late

1970's. In 1976, Steven Jobs (1955-) and Stephen Wozniak (1950-) got their

start selling computer kits similar to the Altair, which they called the Apple.

In 1977, they founded Apple Computer and began marketing the Apple II, the first

pre-assembled personal computer with a keyboard, color monitor, sound, and

graphics. By 1980, the annual sales of their Apple II personal computer had

reached almost $120 million. Other companies, such as Tandy, Amiga, and

Commodore, soon entered the market with personal computers of their own.

IBM, which had been slow to enter the personal computer market, introduced the

IBM PC in 1981, becoming an immediate force. Apple countered with the Macintosh

in 1984, which introduced the now familiar graphical user interface of windows,

icons, and a mouse.

Bill Gates

As computers became the tools of more and more individuals, the software

industry grew and adapted. Bill Gates (1955-) is credited with writing the

first commercial software for personal computers, a BASIC interpreter for the

Altair. In 1975, while a student at Harvard, he cofounded Microsoft along with

Paul Allen (1953-) and helped to build that company into the software giant

that it is today. Much of Microsoft's initial success was due to its marketing

of the MS-DOS operating system for PCs, and applications programs such as word

processors and spreadsheets. By the mid 1990's, Microsoft Windows had become

the dominant operating system for desktop computers, and Bill Gates, in his

early 40's, had become the richest person in the world.

The proliferation of programming languages continued in the 1980's and

beyond. Smalltalk, developed by Alan Kay (1940-) and his colleagues during the 1970's and released publicly in 1980, popularized object-oriented

programming. Ada was developed for the Department of Defense in 1980, to be used

for all government contracts. In 1985, Bjarne Stroustrup (1950-) developed C++,

an object-oriented extension of C. C++ and its offshoot Java (developed in 1995

at Sun Microsystems) have become the dominant languages in software engineering

today.

Generation 5: Parallel Processing & Networking (1985-????)

Deep Blue defeats Kasparov

While generations 0 through 4 are reasonably well-defined, the scope of the

fifth generation of computer technology is still an issue of debate. What is

clear is that computing in the late 1980's and beyond has been defined by

advances in parallel processing and networking. Parallel processing refers

to the integration of multiple (sometimes hundreds or thousands) processors

in a computer. By sharing the computational load across multiple processors,

a parallel computer is able to execute programs in only a fraction of the time

required by a single processor computer. A striking example of this is IBM's

Deep Blue, a parallel processing computer specifically designed for playing

chess. Using 256 processors, Deep Blue is able to evaluate millions of potential

moves in a second and thus select the most promising move. In 1997, Deep Blue

became the first computer to defeat the reigning world chess champion in

tournament play.
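The idea of sharing a computational load can be sketched in Python with the standard `concurrent.futures` module. This is not Deep Blue's algorithm: the scoring function `score` is a stand-in, and the name `best_move_parallel` is hypothetical. Note also that Python threads illustrate the division of labor but, in the standard interpreter, do not by themselves speed up pure computation; separate processes or separate hardware processors are needed for that.

```python
from concurrent.futures import ThreadPoolExecutor


def score(move):
    """Stand-in evaluation; a real chess program would examine the board."""
    return (move * 37) % 101


def best_move_parallel(moves, workers=4):
    """Split the candidate moves among workers and keep the highest-scoring one."""
    chunks = [moves[i::workers] for i in range(workers)]
    chunks = [chunk for chunk in chunks if chunk]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker finds the best move within its own share of the load.
        local_bests = list(pool.map(lambda chunk: max(chunk, key=score), chunks))
    # Combine the partial results into a single answer.
    return max(local_bests, key=score)


moves = list(range(1, 1001))
best = best_move_parallel(moves)
print(score(best) == max(score(m) for m in moves))  # True
```

The pattern is the same at any scale: divide the work, let each processor solve its piece, then merge the partial answers.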

Up until the 1990's, most computers were stand-alone devices. Small-scale

networks of computers were common in large businesses, but communication

between networks was rare. The idea of a large-scale network, connecting

computers from remote sites, traces back to the 1960's. The ARPAnet,

connecting four computers in California and Utah, was founded in 1969.

However, its use was mainly limited to government and academic researchers.

As such, the ARPAnet, or Internet as it would later be called, grew at a slow

but steady pace. That changed in the 1990's with the development of the World

Wide Web. The Web, a multimedia environment in which documents can be

seamlessly linked together, was introduced in 1989 by Tim Berners-Lee, but

first became popular in the mid 1990's with the development of graphical Web

browsers. As of 2002, it is estimated that there are more than 160 million

computers connected to the Internet, of which more than 33 million are Web

servers storing up to 5 billion individual Web pages. For more details on

the development of the Internet and Web, refer to Chapter 3.

(Optional) Supplementary Readings

Review Questions

Answers to be submitted to your instructor via email by Thursday at 9 a.m.

- Jacquard's loom, while unrelated to computing, was influential in the development of modern computing devices. What design features of that machine are relevant to the design of modern computers?

- What role did Herman Hollerith play in the history of computer technology?

- What advantages did vacuum tubes have over electromagnetic relays?

- One advantage of the von Neumann architecture over previous computer architectures was that it was a stored-program machine. Describe how such a computer could be programmed to perform different computations without physically rewiring it.

- What is a transistor and how did the introduction of transistors lead to faster and cheaper computers?

- What is Moore's Law?

- What does the acronym VLSI stand for?

- What was the first personal computer and when was it first marketed?

- How much did you know about the history of computers before reading this chapter? Did you find it informative?

- What did you find most interesting in reading this chapter?

- What did you find most confusing in reading this chapter?